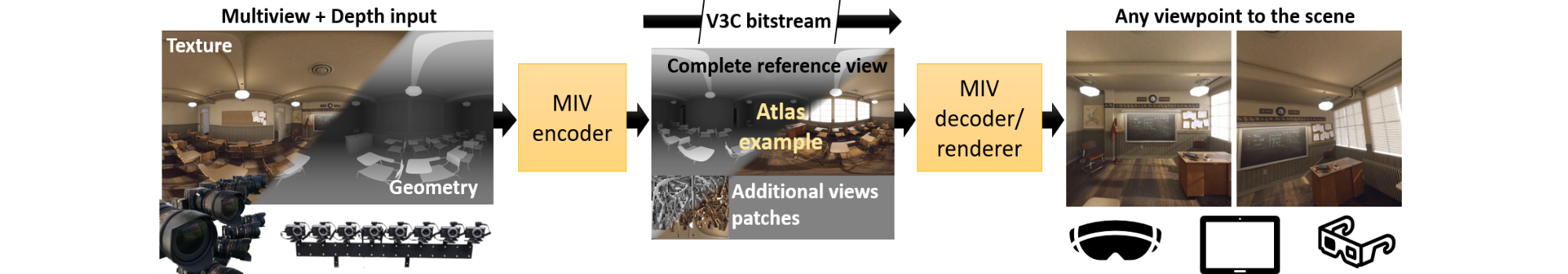

At the 135th MPEG meeting, MPEG Video Coding promoted the MPEG Immersive Video (MIV) standard to the Final Draft International Standard (FDIS) stage. MIV was developed to support compression of immersive video content in which multiple real or virtual cameras capture a real or virtual 3D scene. The standard enables storage and distribution of immersive video content over existing and future networks for playback with six degrees of freedom (6DoF) of view position and orientation.

MIV is a flexible standard for multi-view video with depth (MVD) that leverages the strong hardware support for commonly used video codecs to code volumetric video. Views may use equirectangular, perspective or orthographic projection. By pruning and packing views, MIV achieves bitrates of around 25 Mb/s with HEVC at a pixel rate equivalent to HEVC Level 5.2. Besides the MIV Main profile for MVD, there is the MIV Geometry Absent profile, suitable for cloud-based and decoder-side depth estimation, and the MIV Extended profile, which enables coding of multi-plane images (MPI).
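To make the pixel-rate figure concrete, the following minimal sketch checks whether a set of video sub-streams stays within the maximum luma sample rate of HEVC Level 5.2 (1,069,547,520 luma samples per second). The atlas resolutions, frame rate and function names are illustrative assumptions, not values or APIs taken from the MIV specification or its test model.

```python
# Maximum luma sample rate of HEVC Level 5.2, in luma samples per second.
HEVC_LEVEL_5_2_MAX_LUMA_SAMPLE_RATE = 1_069_547_520


def luma_sample_rate(width, height, fps):
    """Luma samples per second for one video sub-stream."""
    return width * height * fps


def check_pixel_rate(streams, limit=HEVC_LEVEL_5_2_MAX_LUMA_SAMPLE_RATE):
    """Return (total_rate, fits) for a list of (width, height, fps) tuples."""
    total = sum(luma_sample_rate(w, h, fps) for w, h, fps in streams)
    return total, total <= limit


# Hypothetical configuration (assumption): two texture atlases and two
# geometry atlases coded at half resolution in each dimension, all at 30 fps.
example_streams = [
    (4096, 2176, 30),  # texture atlas 0
    (4096, 2176, 30),  # texture atlas 1
    (2048, 1088, 30),  # geometry atlas 0
    (2048, 1088, 30),  # geometry atlas 1
]

total, fits = check_pixel_rate(example_streams)
print(f"combined luma sample rate: {total} samples/s, within budget: {fits}")
```

With these assumed atlas sizes the combined rate is well below the Level 5.2 limit. The budget applies to the sum over all sub-streams, which is why reducing the number and size of atlases through pruning and packing matters.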

The MIV standard is designed as a set of extensions and profile restrictions on the second edition of the Visual Volumetric Video-based Coding (V3C) standard (ISO/IEC 23090-5). The V3C standard is shared between MIV and the Video-based Point Cloud Coding (V-PCC) standard (ISO/IEC 23090-5 Annex H), and may be used by other MPEG-I volumetric codecs under development.

The carriage of MIV is specified through the Carriage of V3C Data standard (ISO/IEC 23090-10).

Work on MIV conformance & reference software (ISO/IEC 23090-23) and verification tests is ongoing. The test model and objective metrics are publicly available at https://gitlab.com/mpeg-i-visual.

Finally, a number of so-called Exploratory Experiments have been launched on topics such as refined depth generation, benchmarking against MV-HEVC (Multi-View High Efficiency Video Coding) and ML-VVC (Multi-Layer Versatile Video Coding), processing of specular materials, and a profile based on depth generation at the client side.