“The MPEG Immersive Video Standard—Current Status and Future Outlook” in IEEE MultiMedia, 2022

by Vinod Kumar Malamal Vadakital, Adrian Dziembowski, Gauthier Lafruit, Franck Thudor, Gwangsoon Lee and Patrice Rondao Alface

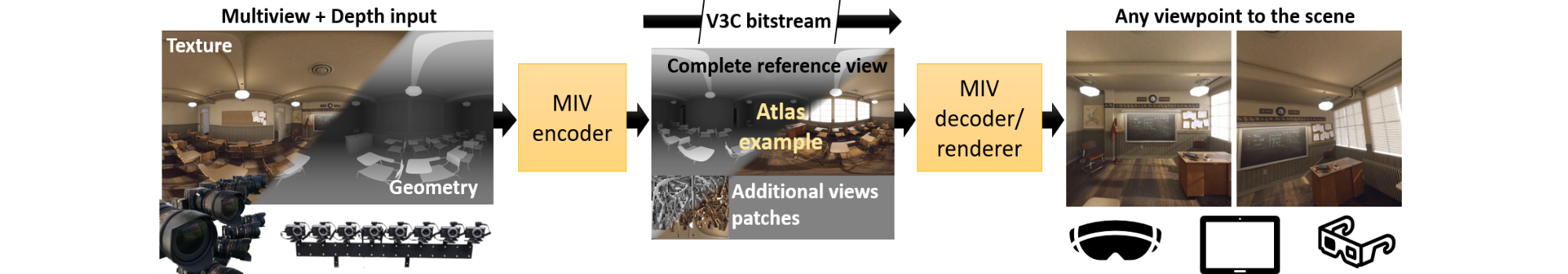

Abstract: The MPEG immersive video (MIV) standard is the latest addition to the MPEG-I suite of standards. It focuses on the representation and coding of immersive media. MIV is designed to support virtual and extended reality applications that require six degrees of freedom visual interaction with the rendered scene. Edition-1 of MIV is now in its final phase of standardization. Leveraging conventional 2-D video codecs, the MIV standard efficiently codes volumetric scenes and allows advanced visual effects like bullet-time fly-throughs. The video feeds capturing the scene are first processed to identify a set of basic views that are augmented with additional information from all other views. The data are then intelligently packed into atlases and further compressed with any existing 2-D video codec of choice. Experimental results show BD-PSNR gains of up to 6 dB in the 10–20 Mbps range compared to a naive simulcast multiview video coding approach. This article concludes with an outlook on future extensions for the second edition of MIV.

DOI: 10.1109/MMUL.2022.3175654

“IV-PSNR – the objective quality metric for immersive video applications”, in IEEE Transactions on Circuits and Systems for Video Technology, 2022

by A. Dziembowski, D. Mieloch, J. Stankowski and A. Grzelka

Abstract: This paper presents a new objective quality metric adapted to the complex characteristics of immersive video (IV), which is prone to errors caused by the processing and compression of multiple input views and by virtual view synthesis. The proposed metric, IV-PSNR, contains two techniques that allow for the evaluation of quality loss for typical immersive video distortions: corresponding pixel shift and global component difference. The experiments compared the proposal with 31 state-of-the-art quality metrics, showing their performance in the assessment of quality in immersive video coding and processing, and in other applications, using commonly used image quality assessment databases (TID2013 and CVIQ). IV-PSNR outperforms other metrics in immersive video applications and can still be used efficiently to evaluate other images and videos. Moreover, basing the metric on the calculation of PSNR keeps its computational complexity low. A publicly available, efficient implementation of the IV-PSNR software was provided by the authors of this paper and is used by ISO/IEC MPEG for evaluation and research on the upcoming MPEG Immersive Video (MIV) coding standard.

DOI: 10.1109/TCSVT.2022.3179575

“Overview and Efficiency of Decoder-Side Depth Estimation in MPEG Immersive Video”, in IEEE Transactions on Circuits and Systems for Video Technology, 2022

by Dawid Mieloch, Patrick Garus, Marta Milovanović, Joël Jung, Jun Young Jeong, Smitha Lingadahalli Ravi, Basel Salahieh

Abstract: This paper presents the overview and rationale behind the Decoder-Side Depth Estimation (DSDE) mode of the MPEG Immersive Video (MIV) standard, using the Geometry Absent profile, for efficient compression of immersive multiview video. A MIV bitstream generated by an encoder operating in the DSDE mode does not include depth maps. It only contains the information required to reconstruct them in the client or in the cloud: decoded views and metadata. The paper explains the technical details and techniques supported by this novel MIV DSDE mode. The description additionally includes the specification of the Geometry Assistance Supplemental Enhancement Information, which helps to reduce the complexity of depth estimation when it is performed in the cloud or at the decoder side. Depth estimation in MIV is a non-normative part of the decoding process; therefore, any method can be used to compute the depth maps. This paper lists a set of requirements for depth estimation induced by the specific characteristics of the DSDE. The depth estimation reference software, continuously and collaboratively developed with MIV to meet these requirements, is presented in this paper. Several original experimental results are presented. The efficiency of the DSDE is compared to two MIV profiles. The combined non-transmission of depth maps and efficient coding of textures enabled by the DSDE leads to efficient compression and improved rendering quality compared to the usual encoder-side depth estimation. Moreover, results of the first evaluation of state-of-the-art multiview depth estimators in the DSDE context, including machine learning techniques, are presented.

DOI: 10.1109/TCSVT.2022.3162916

Tutorial on MIV at VCIP 2021

Presenters:

Bart Kroon, Philips Research Eindhoven, Netherlands

Dawid Mieloch, Poznań University of Technology, Poland

Gauthier Lafruit, Université Libre de Bruxelles / Brussels University, Belgium

Abstract: The tutorial gives a high-level overview of the MPEG Immersive Video (MIV) coding standard for compressing data from multiple cameras in view of supporting VR free navigation and light field applications. The MIV coding standard (https://mpeg-miv.org) is video codec agnostic, i.e. it consists of a pre- and post-processing shell around existing codecs, like AVC, EVC, HEVC and VVC. Consequently, no coding details like DCT block coding and/or motion vectors will be presented, but rather high-level concepts about how to prepare multiview+depth video sequences to be handled by MIV. Relations with other parts of the MPEG-I standard (“I” refers to “Immersive”), e.g. point cloud coding with V-PCC, and streaming with DASH, will also be covered.

Links to tutorial parts: Part 1, Demo break, Part 2a, Part 2b

“MPEG Immersive Video Coding Standard”, in Proceedings of the IEEE, 2021

by Jill M. Boyce, Renaud Doré, Adrian Dziembowski, Julien Fleureau, Joel Jung, Bart Kroon, Basel Salahieh, Vinod Kumar Malamal Vadakital, Lu Yu

Abstract: This article introduces the ISO/IEC MPEG Immersive Video (MIV) standard, MPEG-I Part 12, which is undergoing standardization. The draft MIV standard provides support for viewing immersive volumetric content captured by multiple cameras with six degrees of freedom (6DoF) within a viewing space that is determined by the camera arrangement in the capture rig. The bitstream format and decoding processes of the draft specification along with aspects of the Test Model for Immersive Video (TMIV) reference software encoder, decoder, and renderer are described. The use cases, test conditions, quality assessment methods, and experimental results are provided. In the TMIV, multiple texture and geometry views are coded as atlases of patches using a legacy 2-D video codec, while optimizing for bitrate, pixel rate, and quality. The design of the bitstream format and decoder is based on the visual volumetric video-based coding (V3C) and video-based point cloud compression (V-PCC) standard, MPEG-I Part 5.

DOI: 10.1109/JPROC.2021.3062590

“Video Based Coding of Volumetric Data”, IEEE International Conference on Image Processing (ICIP), October 2020

by Danillo B. Graziosi and Bart Kroon

Abstract: New standards are emerging for the coding of volumetric 3D data such as immersive video and point clouds. Some of these volumetric encoders similarly use video codecs as the core of their compression approach, but apply different techniques to convert volumetric 3D data into 2D content for subsequent 2D video compression. Currently, there are two MPEG activities that follow this paradigm: ISO/IEC 23090-5 Video-based Point Cloud Compression (V-PCC) and ISO/IEC 23090-12 MPEG Immersive Video (MIV). In this article, we propose that both standards define 2D projection as a common transmission format. We then describe a procedure based on camera projections, applicable to both standards, that converts 3D information into 2D images for efficient 2D compression. Results show that our approach encodes both point clouds and immersive video content with the same performance as the test models that MPEG experts developed separately for the respective standards. We conclude the article by discussing further integration steps and future directions.

DOI: 10.1109/ICIP40778.2020.9190689

“An Immersive Video Experience with Real-Time View Synthesis Leveraging the Upcoming MIV Distribution Standard”, IEEE International Conference on Multimedia & Expo Workshops (ICMEW), July 2020

by Julien Fleureau, Bertrand Chupeau, Franck Thudor, Gérard Briand, Thierry Tapie, Renaud Doré

Abstract: The upcoming MPEG Immersive Video (MIV) standard will enable storage and distribution of immersive video content over existing and future networks, for playback with full or partial six degrees of freedom of view position and orientation. The demo showcases a VOD server streaming MIV-encoded immersive video content to a decoding client to which an HMD is connected. While seated, the user perceives parallax when moving their head (translation, rotation). The core contribution of the demo is a real-time GPU implementation of the virtual view synthesis at the decoder side. An enhanced quality of rendered views is attained through a strategy for weighting source-view contributions that leverages standardized metadata conveying information on the pruning decisions made at the encoder side.

DOI: 10.1109/ICMEW46912.2020.9105948

“Understanding MPEG-I Coding Standardization in Immersive VR/AR Applications”, SMPTE Motion Imaging Journal, November 2019

by Gauthier Lafruit, Daniele Bonatto, Christian Tulvan, Marius Preda, Lu Yu

Vol. 128, No. 10, pp. 33–39, ISSN: 1545-0279.

Abstract: After decades of developing leading-edge 2D video compression technologies, the Moving Picture Experts Group (MPEG) is currently working on a new era of coding for immersive applications, referred to as MPEG-I, where “I” refers to the “Immersive” aspects. It ranges from 360° video with head-mounted displays to free navigation in 3D space with head-mounted and 3D light field displays. Two families of coding approaches, covering typical industrial workflows, are currently considered for standardization, MultiView + Depth (MVD) Video Coding and Point Cloud Coding, both supporting high-quality rendering at bitrates of up to a couple of hundred megabits per second. This paper provides a technical and historical overview of the acquisition, coding, and rendering technologies considered in the MPEG-I standardization activities.

“Standardization Status of Immersive Video Coding”, IEEE Journal on Emerging and Selected Topics in Circuits and Systems, February 2019

by Matthias Wien, Jill Boyce, Thomas Stockhammer, Wen-Hsiao Peng

Abstract: With the increasing availability of capture and display devices dedicated to immersive media, the coding and transmission of these media has recently become a highest-priority subject of standardization. Different levels of immersiveness are defined with respect to an increasing degree of freedom in the movements of the observer within the immersive media scene. The levels range from three degrees of freedom (3DoF), allowing the user to look around in all directions from a fixed point of view, to six degrees of freedom (6DoF), where the user can freely alter the viewpoint within the immersive media scene. The Moving Picture Experts Group (MPEG) of ISO/IEC is developing a standards suite on “Coded Representation of Immersive Media,” called MPEG-I, to provide technical solutions for building blocks of the media transmission chain, ranging from architecture, systems tools, and coding of video and audio signals to point clouds and timed text. In this paper, an overview of recent and ongoing standardization efforts in this area is presented. While some specifications, such as High Efficiency Video Coding (HEVC) or version 1 of the Omnidirectional Media Format (OMAF), are already available, other activities are under development or in the exploration phase. This paper addresses the status of these efforts with a focus on video signals, indicates the development timelines, summarizes the main technical details, and provides pointers to further points of reference.