What is MIV?

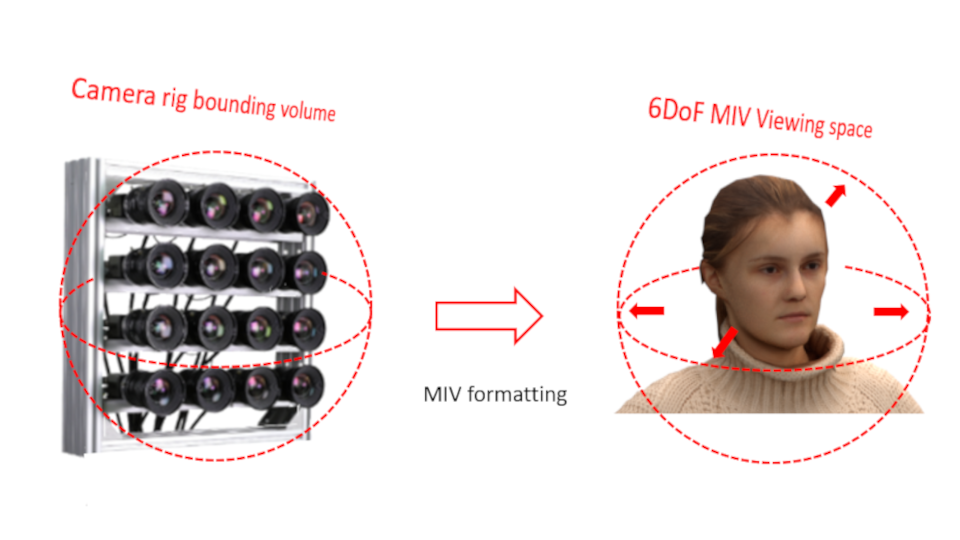

The MPEG Immersive Video (MIV) standard specifies the compression of immersive video content, also known as volumetric video, in which a real or virtual 3D scene is captured by multiple real or virtual cameras. It enables storage and distribution of immersive video content over existing and future networks, for playback with 6 degrees of freedom (6DoF) of view position and orientation within a limited viewing space, and with different fields of view depending on the capture setup. The cameras in the rig may be co-aligned in a flat setup, arranged linearly to allow significant lateral displacement, or arranged divergently to give a large field of view suited to viewing with a head-mounted display (HMD). Compared to a flat omnidirectional 2D video experience, MIV promises a markedly higher level of visual comfort and immersion.

Standardisation context and roadmap

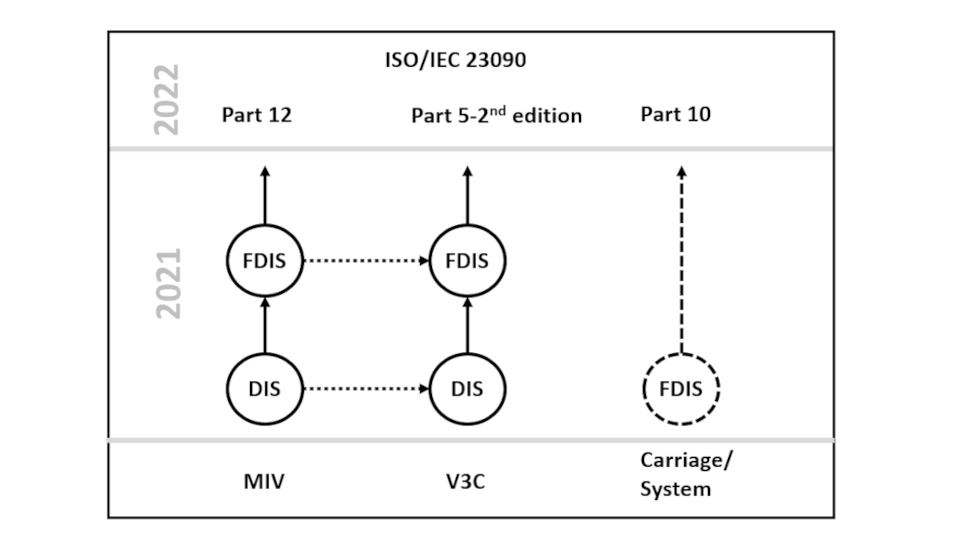

The MIV standard is part of ISO/IEC 23090 MPEG-I, a collection of standards for the digital representation of immersive media. It reached Draft International Standard (DIS) status at the January 2021 meeting, with a Final Draft International Standard (FDIS) expected to be delivered to ISO for publication as the ISO/IEC 23090-12 specification in Q3 of the same year. In 2020, the MIV standard was aligned with the Video-based Point Cloud Compression (V-PCC) MPEG standard (ISO/IEC 23090-5) because of their many technical commonalities, which led to a restructuring of both specifications. As a result, the MIV standard references the common part of ISO/IEC 23090-5 2nd edition, Visual Volumetric Video-based Coding (V3C) [2], which also has DIS status and includes V-PCC as Annex H. V3C provides extension mechanisms for V-PCC and MIV. Finally, SC 29/WG 03 MPEG Systems has developed a systems standard based on ISOBMFF for the carriage of V3C data (ISO/IEC 23090-10) [3], which reached the Final Draft International Standard (FDIS) stage in January 2021.

Key design principles

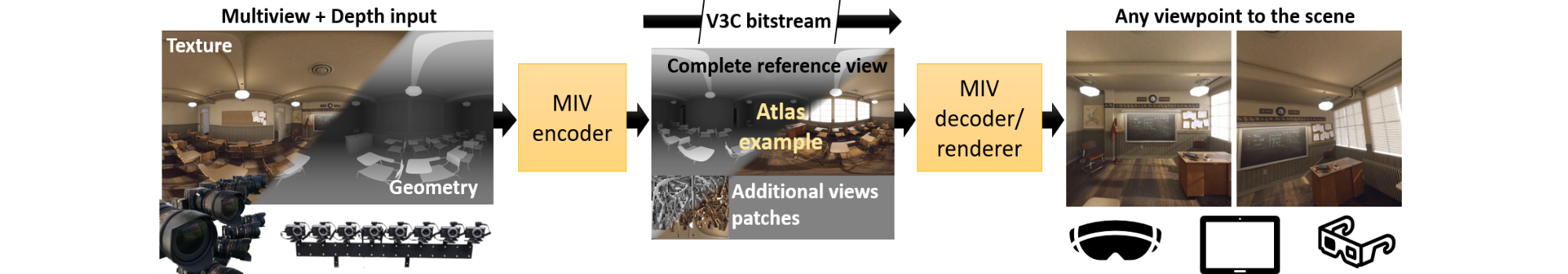

The core of the MIV design is to leverage video compression technologies and their end-to-end ecosystem. Since V3C is video-codec agnostic, the MIV standard uses HEVC today but will be able to switch seamlessly to new-generation codecs. Video compression is applied to geometry information (depth) and to attribute information such as texture and transparency. This information is generated by a process that removes the redundancy between the multiple camera inputs and produces patch atlases, such as the one shown in the accompanying figure. Diverse atlas creation processes are possible. The MIV specification includes a number of features such as group-based encoding, Multiple Plane Image (MPI, where geometry is replaced by transparency), entity-based coding, inpainting information, and spatial and temporal access. Typical bandwidths range from a couple of Mbps to a few tens of Mbps, as simulated with the MIV reference software, and several demos with a real-time decoder have been given in broadcast and broadband configurations. For the latter case, the Carriage of V3C Data systems specification offers a way to implement mechanisms such as DASH adaptive streaming.

Visual Volumetric Video-based Coding (V3C)

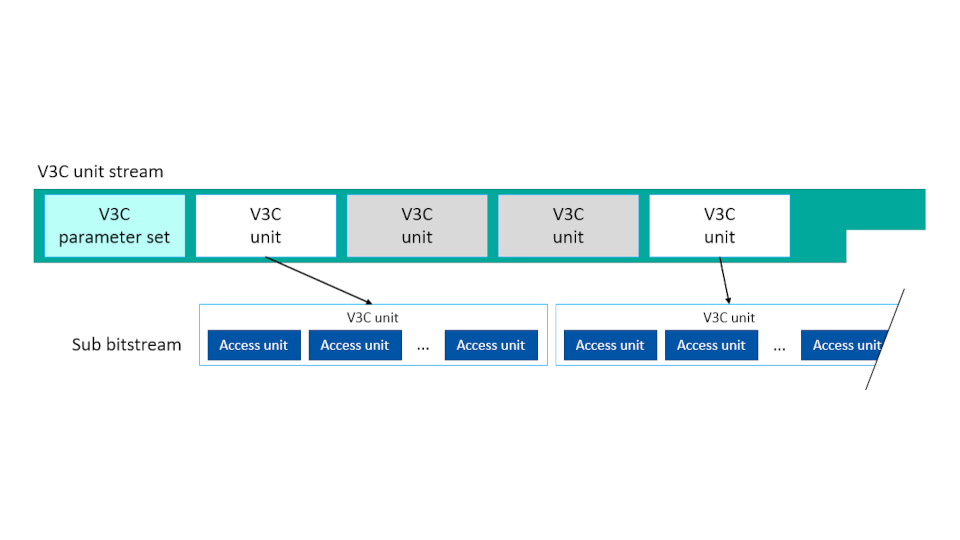

V-PCC and MIV share a common bitstream format (V3C). The V-PCC and MIV specifications define a set of extensions and profiles in reference to V3C. For instance, MIV supports multiple atlases and view parameters while V-PCC supports point reconstruction data. For each atlas, geometry, occupancy and/or attribute information is carried in video sub-bitstreams in parallel with an atlas sub-bitstream that includes the patch parameters. View parameters (camera extrinsics, intrinsics, resolution, etc.) are carried in a common atlas sub-bitstream that is shared between the atlases.

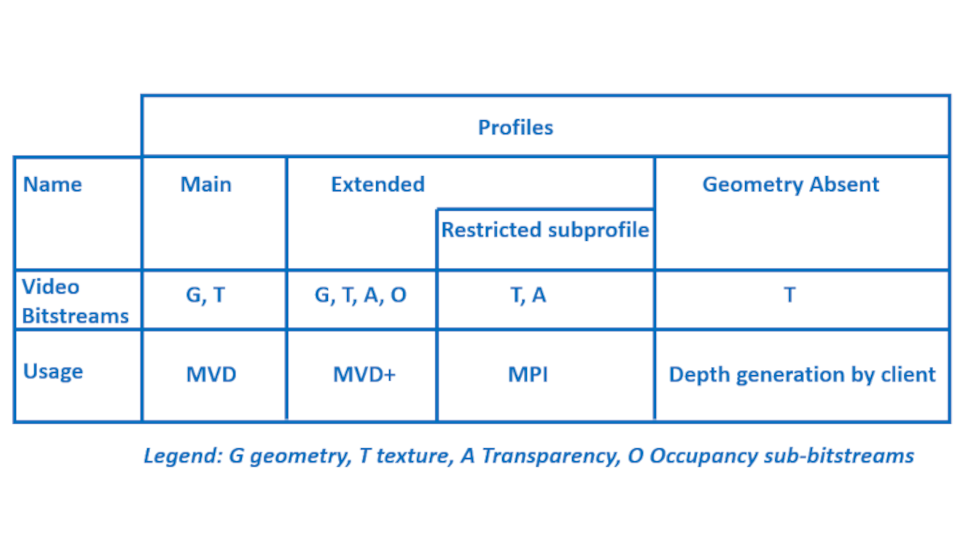
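
The layout described above can be pictured as a small data model. The following Python sketch is purely illustrative: the class and field names (`V3CContent`, `Atlas`, `ViewParams`, and so on) are assumptions made for this example and do not correspond to syntax elements of ISO/IEC 23090-5 or ISO/IEC 23090-12. It only mirrors the relationships stated in the text: view parameters are shared across atlases, and each atlas carries patch parameters alongside its video components.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ViewParams:
    """One view entry, as carried in the common atlas sub-bitstream."""
    view_id: int
    width: int
    height: int
    intrinsics: Dict[str, float]   # hypothetical keys, for illustration only
    extrinsics: Dict[str, float]   # hypothetical keys, for illustration only


@dataclass
class Atlas:
    """One atlas: patch parameters plus its video sub-bitstreams."""
    atlas_id: int
    patch_params: List[dict] = field(default_factory=list)  # atlas sub-bitstream
    geometry: Optional[bytes] = None                         # video sub-bitstream
    occupancy: Optional[bytes] = None                        # video sub-bitstream
    attributes: Dict[str, bytes] = field(default_factory=dict)  # e.g. texture

    def video_components(self) -> List[str]:
        """List which video sub-bitstreams this atlas actually carries."""
        names = []
        if self.geometry is not None:
            names.append("geometry")
        if self.occupancy is not None:
            names.append("occupancy")
        names.extend(f"attribute:{name}" for name in sorted(self.attributes))
        return names


@dataclass
class V3CContent:
    """Shared view parameters plus one or more atlases."""
    view_params: List[ViewParams]
    atlases: List[Atlas]


# Two atlases sharing a single list of view parameters (payloads are empty placeholders).
content = V3CContent(
    view_params=[ViewParams(0, 1920, 1080, {"focal": 1000.0}, {"x": 0.0})],
    atlases=[
        Atlas(0, geometry=b"", attributes={"texture": b""}),
        Atlas(1, geometry=b"", attributes={"texture": b""}),
    ],
)

for atlas in content.atlases:
    print(atlas.atlas_id, atlas.video_components())
```

Keeping the view parameters on the top-level object rather than on each atlas reflects the statement that they are shared between the atlases.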

Profiles

The MIV standard comes with a small set of profiles suited to different levels of bandwidth or client-side decoding resources. The Main profile is based on geometry with embedded occupancy plus texture, a format often called MVD (for multiple video + depth). The Extended profile can separate occupancy from geometry and can enable a transparency attribute; it has a Restricted Geometry sub-profile that allows delivery in MPI format. Finally, the Geometry Absent profile allows geometry information to be generated on the client side.
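
As a rough aid to reading the profile descriptions, the sketch below encodes where a client would obtain geometry under each profile. It paraphrases this page only and is not derived from the normative profile tables of the specification; the enum `MivProfile` and the helper functions are hypothetical names introduced for illustration.

```python
from enum import Enum


class MivProfile(Enum):
    MAIN = "Main"
    EXTENDED = "Extended"
    EXTENDED_RESTRICTED_GEOMETRY = "Extended (Restricted Geometry)"
    GEOMETRY_ABSENT = "Geometry Absent"


# Paraphrase of the text above; an assumption-laden summary, not normative constraints.
GEOMETRY_SOURCE = {
    MivProfile.MAIN: "coded geometry video, occupancy embedded",
    MivProfile.EXTENDED: "coded geometry video, occupancy may be separate",
    MivProfile.EXTENDED_RESTRICTED_GEOMETRY: "MPI: geometry replaced by transparency",
    MivProfile.GEOMETRY_ABSENT: "generated at the client",
}


def geometry_source(profile: MivProfile) -> str:
    """Return a short description of where geometry comes from for a profile."""
    return GEOMETRY_SOURCE[profile]


def client_must_generate_geometry(profile: MivProfile) -> bool:
    """True only for the profile in which no geometry is delivered."""
    return profile is MivProfile.GEOMETRY_ABSENT


for profile in MivProfile:
    print(f"{profile.value}: {geometry_source(profile)}")
```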